Innovative 1:1 Compute

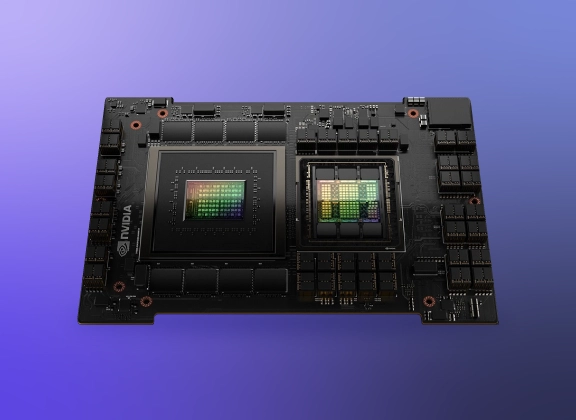

NVIDIA GH200 Grace Hopper introduces a new form of hardware: a processor and accelerator that pairs one CPU with one GPU over a direct interconnect for performance and efficiency.

Unparalleled Bandwidth

The NVIDIA NVLink-C2C interconnect provides 900 GB/s of bidirectional bandwidth, reducing data-transfer bottlenecks between the Grace CPU and Hopper GPU.
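To put the bandwidth figure in perspective, here is a minimal back-of-the-envelope sketch of ideal transfer times. It assumes the 900 GB/s bidirectional figure splits evenly into 450 GB/s per direction, and it uses a nominal PCIe 5.0 x16 rate of roughly 64 GB/s per direction as a comparison point. The PCIe figure, the function names, and the 90 GB payload are illustrative assumptions, not values from this page, and real-world throughput will be lower than these peak rates.

```python
# Back-of-the-envelope transfer-time estimate using peak rates, not measured throughput.

NVLINK_C2C_BIDIR_GBPS = 900.0  # from the text: total across both directions

# Assumption (not from the text): nominal PCIe 5.0 x16 rate, about 64 GB/s per direction.
PCIE5_X16_PER_DIR_GBPS = 64.0


def per_direction(bidirectional_gbps: float) -> float:
    """Split a bidirectional figure evenly into a one-way rate (an assumption)."""
    return bidirectional_gbps / 2.0


def transfer_seconds(size_gb: float, rate_gbps: float) -> float:
    """Ideal time to move size_gb at rate_gbps (GB/s), ignoring latency and overhead."""
    if rate_gbps <= 0:
        raise ValueError("rate must be positive")
    return size_gb / rate_gbps


if __name__ == "__main__":
    size_gb = 90.0  # hypothetical payload, e.g. a large set of model weights
    c2c_rate = per_direction(NVLINK_C2C_BIDIR_GBPS)
    print(f"NVLink-C2C one-way: {transfer_seconds(size_gb, c2c_rate):.2f} s")
    print(f"PCIe 5.0 x16 one-way: {transfer_seconds(size_gb, PCIE5_X16_PER_DIR_GBPS):.2f} s")
    print(f"Ratio: {c2c_rate / PCIE5_X16_PER_DIR_GBPS:.1f}x")
```

Under these assumptions, the one-way NVLink-C2C rate works out to about seven times the nominal PCIe 5.0 x16 rate. Treat the result as an upper bound on the benefit rather than a benchmark.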

Immense Scalability

The NVIDIA NVLink Switch System scales GH200-based nodes by connecting up to 256 NVIDIA Grace Hopper Superchips into a single seamless, high-bandwidth system.

Accelerated Servers featuring NVIDIA Grace Hopper GH200

- Processor: 1x NVIDIA Grace Hopper Superchip

- Drive Bays: 8x E1.S NVMe Hot-Swap

- Supports: Up to 3x PCI-E 5.0 cards

- Rack Height: 1U

- Processor: 1x NVIDIA Grace Hopper Superchip (Liquid Cooled)

- Drive Bays: 8x E1.S NVMe Hot-Swap

- Supports: Up to 3x PCI-E 5.0 cards

- Rack Height: 1U

- Number of Nodes: 2

- Processor: 1x NVIDIA Grace Hopper Superchip (Liquid Cooled)

- Drive Bays: 8x E1.S NVMe Hot-Swap

- Rack Height: 1U

Servers featuring Grace, NVIDIA's Arm-based CPU

- Number of Nodes: 2

- Processor: 1x NVIDIA Grace CPU Superchip (Liquid Cooled)

- Drive Bays: 8x E1.S NVMe Hot-Swap

- Rack Height: 1U

ES2-7409204

Single NVIDIA Grace CPU Quad GPU 2U Server

- Processor: 1x NVIDIA Grace CPU Superchip

- Drive Bays: 8x E1.S NVMe Hot-Swap

- Supports: Up to 4x Double-Wide PCI-E 5.0 cards

- Rack Height: 2U

Powering Next-Gen AI

To facilitate new discoveries, NVIDIA has developed a new computing platform to accelerate and power the next generation of AI. The NVIDIA Grace Hopper Superchip integrates one CPU with one GPU, connected via NVLink, to deliver a balanced, powerful, and efficient processor and accelerator suited to AI training, simulation, and inferencing. With 7X the fast-access memory and 7X the bandwidth, NVIDIA GH200 speeds up AI jobs.

Two Groundbreaking Advancements in One

The NVIDIA GH200 Superchip fuses the Grace CPU and Hopper GPU architectures using NVIDIA NVLink-C2C, a high-bandwidth, low-latency chip-to-chip interconnect that keeps CPU and GPU memory coherent.

- NVIDIA Grace CPU is the first NVIDIA data center CPU. It features 72 Arm Neoverse V2 CPU cores and 480GB of LPDDR5 memory, and it delivers 53% more bandwidth at one-eighth the power per GB/s for better energy efficiency.

- NVIDIA Hopper GPU uses the Transformer Engine, which supports mixed FP8 and FP16 precision formats. With mixed precision, Hopper manages accuracy while delivering up to 9X faster training and 30X faster inferencing.

Have any questions? We've got answers.

Our experts are here to help you find the right solution.