Introduction - What Are Vector Databases?

Vector databases are specialized systems designed to store and retrieve high-dimensional vector representations of complex, unstructured data such as images, text, or audio. Because the data is represented as numerical vectors, these systems can capture context and conceptual similarity, returning results that are similar to a query rather than only exact matches, which enables advanced data analysis and retrieval.

As the volume of data in vector databases increases, storing and retrieving information becomes increasingly challenging. Binary quantization simplifies high-dimensional vectors into compact binary codes, reducing data size and enhancing retrieval speed. This approach improves storage efficiency and enables faster searches, allowing databases to manage larger datasets more effectively.

Understanding Binary Quantization

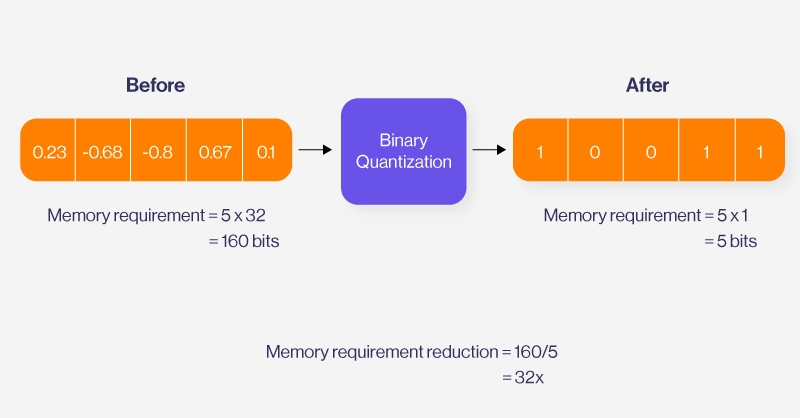

After the initial embedding is obtained, binary quantization is applied. It reduces each feature of a given vector to a single binary digit, 0 or 1: it assigns 1 for positive values and 0 for negative values, capturing the sign of the corresponding number.

For example, suppose an image is represented by four distinct features, each stored as a 32-bit floating-point (float32) value. Performing binary quantization on this vector converts each feature into a single binary digit. Thus, the original vector of four float32 values (128 bits) is transformed into a vector of four binary digits, such as [1, 0, 0, 1], occupying only 4 bits.

This reduces the space every vector takes by a factor of 32, since the number stored at every dimension goes from a 32-bit float down to 1 bit. However, reversing this process is impossible, which makes this a lossy compression technique.
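The four-feature example above can be sketched in a few lines of Python. This is a minimal illustration, not a database implementation: the embedding values are made up, the helper names `binary_quantize` and `pack_bits` are hypothetical, and mapping an exact zero to 0 is an assumption, since the text only covers positive and negative values.

```python
def binary_quantize(vector):
    """Map each component to 1 if it is positive, else 0.

    Assumption: an exact zero is mapped to 0; the article only
    specifies positive -> 1 and negative -> 0.
    """
    return [1 if x > 0 else 0 for x in vector]


def pack_bits(bits):
    """Pack a list of 0/1 digits into one Python int, first bit most significant."""
    code = 0
    for bit in bits:
        code = (code << 1) | bit
    return code


embedding = [0.73, -1.20, -0.05, 2.41]   # hypothetical four-feature embedding
bits = binary_quantize(embedding)         # [1, 0, 0, 1]
code = pack_bits(bits)                    # 0b1001

float32_bits = 32 * len(embedding)        # 128 bits when stored as float32
binary_bits = len(bits)                   # 4 bits after quantization
compression_ratio = float32_bits // binary_bits

print(bits, bin(code), f"{compression_ratio}x smaller")
```

Packing the digits into an integer is one convenient way to store the code compactly; real systems typically pack bits into bytes or machine words.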

Why does binary quantization work well for high-dimensional data?

When locating a vector in space, the sign indicates the direction to move while the magnitude specifies how far to move in that chosen direction.

In binary quantization, we simplify the data by retaining only the sign of each vector component: 1 for positive values and 0 for negative ones. While this might seem extreme, as it omits the magnitude of movement along each axis, it works surprisingly well for high-dimensional vectors. The reason is that the signs alone divide an n-dimensional space into 2^n regions, so as the number of dimensions grows, it becomes increasingly unlikely that two unrelated vectors land in the same region and collide on the same binary code.
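One way to get a feel for the effect of dimensionality is to quantize random vectors and count how many distinct binary codes come out. The sketch below assumes components drawn from a standard normal distribution, which is an illustrative choice and not a property of any particular embedding model; `sign_code` and `count_distinct_codes` are hypothetical helper names.

```python
import random


def sign_code(vector):
    """Binary-quantize a vector into a tuple of sign bits (positive -> 1, otherwise 0)."""
    return tuple(1 if x > 0 else 0 for x in vector)


def count_distinct_codes(num_vectors, dims, seed=0):
    """Draw random vectors and count how many distinct binary codes they produce."""
    rng = random.Random(seed)
    codes = set()
    for _ in range(num_vectors):
        vector = [rng.gauss(0.0, 1.0) for _ in range(dims)]
        codes.add(sign_code(vector))
    return len(codes)


for dims in (2, 8, 64):
    distinct = count_distinct_codes(num_vectors=1000, dims=dims)
    print(f"{dims:>2} dims: {2 ** dims} possible codes, "
          f"{distinct} distinct codes among 1000 vectors")
```

With 2 dimensions there are only 4 possible codes, so 1000 vectors are forced to share them; with 64 dimensions there are 2^64 possible codes and shared codes become extremely unlikely.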

Advantages of Binary Quantization in Vector Databases

Improved Performance

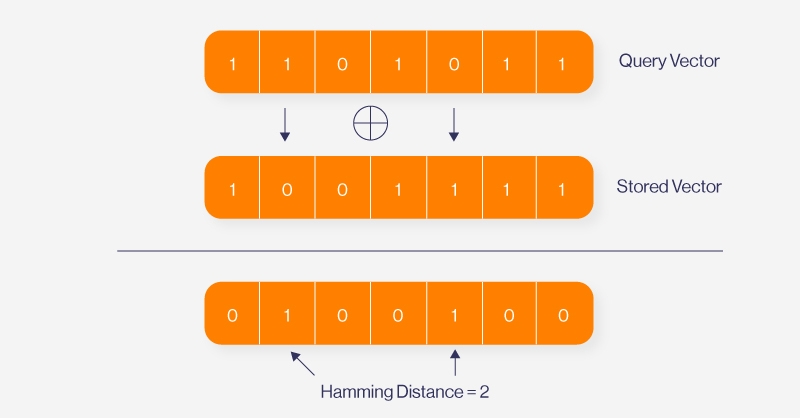

Binary quantization enhances performance by representing vectors with binary codes (0s and 1s), allowing for the use of Hamming distance as a similarity metric. Hamming distance is computed using the XOR operation between binary vectors: XOR results in 1 where bits differ and 0 where they are the same. The number of 1s in the XOR result indicates the number of differing bits, providing a fast and efficient measure of similarity.

This approach simplifies and speeds up vector comparisons compared to more complex distance metrics like Euclidean distance.
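As a minimal sketch of the XOR-and-count step described above, assuming the binary codes are stored as Python integers (an illustrative choice; production systems usually operate on packed machine words):

```python
def hamming_distance(code_a, code_b):
    """Count the differing bits between two binary codes stored as Python ints."""
    differing = code_a ^ code_b          # XOR: 1 wherever the bits differ
    return bin(differing).count("1")     # number of 1s in the XOR result


a = 0b1001
b = 0b1100
distance = hamming_distance(a, b)        # 0b1001 ^ 0b1100 = 0b0101, so 2 bits differ
print(distance)
```

A smaller distance means the two codes agree on more sign bits, so the underlying vectors are treated as more similar.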

Enhanced Efficiency

Binary quantization compresses vectors from 32-bit floats to 1-bit binary digits, drastically reducing storage requirements, as illustrated in the figure above. This compression lowers storage costs and accelerates processing speeds, making it highly efficient for vector databases that need to store and manage huge amounts of data.
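To put rough numbers on the storage reduction, the following sketch compares raw vector storage before and after quantization. The collection size and dimensionality are hypothetical, and the calculation ignores index structures and metadata, which add overhead in a real database.

```python
def storage_bytes(num_vectors, dims, bits_per_dim):
    """Raw vector storage in bytes, ignoring index and metadata overhead."""
    return num_vectors * dims * bits_per_dim // 8


num_vectors = 1_000_000   # hypothetical collection size
dims = 1024               # hypothetical embedding dimensionality

float32_size = storage_bytes(num_vectors, dims, bits_per_dim=32)
binary_size = storage_bytes(num_vectors, dims, bits_per_dim=1)

print(f"float32: {float32_size / 1e9:.3f} GB")
print(f"binary:  {binary_size / 1e9:.3f} GB")
print(f"ratio:   {float32_size // binary_size}x")
```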

Scalability

Increasing the number of dimensions reduces the chance that two different vectors collide on the same binary code, which makes binary quantization even more effective for high-dimensional vectors.

This allows for efficient management and storage of vast datasets, since the compact binary format significantly reduces storage space and computational load. As the number of dimensions grows, the exponential increase in potential regions keeps collisions rare, maintaining high performance and responsiveness. This makes binary quantization an excellent choice for scalable vector databases that must handle ever-growing data volumes.

Challenges and Considerations

- Accuracy and Precision: While binary quantization significantly speeds up search operations, it reduces the accuracy and precision of the search results. Nuance and detail carried by the full-precision values can be lost, leading to less precise results. Furthermore, binary quantization is a lossy compression: once the data has been quantized, the original values cannot be recovered from the binary codes. Integrating binary quantization with advanced indexing techniques, such as HNSW, can help improve search accuracy while retaining the speed benefits of binary encoding.

- Implementation Complexity: Getting the full speed benefit depends on hardware and software support for fast bitwise operations. SIMD (Single Instruction, Multiple Data) instructions allow multiple data points to be processed simultaneously, significantly speeding up computations even in a brute-force approach to similarity calculation.

- Data Preprocessing: Binary quantization assumes that the data is roughly normally distributed, ideally centered around zero so that the sign of each component carries information. When the data is skewed or has outliers, binary quantization may lead to suboptimal results, affecting the accuracy and efficiency of the vector database.

- Metric Discrepancies: Binary quantization relies on Hamming distance, which approximates angular metrics such as cosine similarity well but is a poor fit for magnitude-sensitive metrics such as Euclidean distance, because the magnitude of each component is discarded. The distance metric should therefore be chosen according to the application domain.
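The metric discrepancy point can be illustrated with two vectors that point in the same direction but differ greatly in magnitude. This is a small sketch with made-up values and hypothetical helper names; it assumes an exact zero maps to 0.

```python
import math


def binary_quantize(vector):
    """Positive -> 1, otherwise 0 (mapping zero to 0 is an assumption)."""
    return [1 if x > 0 else 0 for x in vector]


def hamming(bits_a, bits_b):
    """Number of positions where the two bit lists differ."""
    return sum(a != b for a, b in zip(bits_a, bits_b))


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


u = [0.5, -0.25, 1.0, -2.0]
v = [x * 100 for x in u]   # same direction as u, 100 times the magnitude

print("hamming:  ", hamming(binary_quantize(u), binary_quantize(v)))
print("cosine:   ", round(cosine_similarity(u, v), 6))
print("euclidean:", round(euclidean(u, v), 2))
```

The binary codes are identical and the cosine similarity is essentially 1, yet the Euclidean distance is large: the codes agree with the angular view of similarity and say nothing about magnitude.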

Future Trends and Developments

We can look forward to certain enhancements in binary quantization, such as adjusting thresholds based on the data distribution to boost accuracy and incorporating feedback loops for continuous improvement. Additionally, combining binary quantization with advanced indexing techniques promises to further optimize search efficiency.
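As one possible reading of distribution-aware thresholds, the sketch below replaces the fixed threshold of zero with a per-dimension median computed from the data. This is an assumption made for illustration, not a description of how any specific database implements it; the data and the helper names `fit_thresholds` and `quantize_with_thresholds` are hypothetical.

```python
from statistics import median


def fit_thresholds(vectors):
    """Compute one threshold per dimension (here, the median of that dimension)."""
    return [median(column) for column in zip(*vectors)]


def quantize_with_thresholds(vector, thresholds):
    """Set a bit to 1 when the component exceeds its dimension's threshold."""
    return [1 if x > t else 0 for x, t in zip(vector, thresholds)]


# Hypothetical skewed data: every component is positive, so plain sign-based
# quantization would map all of these vectors to [1, 1, 1].
vectors = [
    [0.9, 0.1, 0.4],
    [0.2, 0.8, 0.5],
    [0.6, 0.3, 0.9],
    [0.1, 0.7, 0.2],
]

thresholds = fit_thresholds(vectors)
codes = [quantize_with_thresholds(v, thresholds) for v in vectors]

for vector, code in zip(vectors, codes):
    print(vector, "->", code)
```

With the median as threshold, each dimension splits the data roughly in half, so the four vectors receive four different codes instead of one shared code.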

Applications of Binary Quantization in Vector Databases

- Image and Video Retrieval: Images and videos represent high-dimensional data with substantial storage demands. For instance, a single high-resolution image can have millions of pixels, each requiring multiple bytes to represent color information. Binary quantization compresses these high-dimensional feature vectors into compact binary codes, significantly reducing storage needs and enhancing retrieval efficiency.

- Recommendation Systems: Binary quantization enhances recommendation systems by converting user and item feature vectors into compact binary codes, improving both speed and efficiency. This can be further optimized by combining it with approximate nearest neighbor techniques such as locality-sensitive hashing (LSH), which helps keep recommendations accurate through refined searches.

- Natural Language Processing (NLP): Binary quantization aids in processing and analyzing textual data by reducing the storage requirements of text embeddings in the vector database. This allows for faster retrieval and comparison of text data, making chatbots more responsive and effective in handling user queries.
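The retrieval pattern shared by these applications can be sketched as a brute-force search over binary codes. The item names and embedding values below are invented for illustration, and `to_code` and `search` are hypothetical helpers; a real system would use a proper index and much longer vectors.

```python
def to_code(vector):
    """Binary-quantize a vector and pack it into an int (positive -> 1, otherwise 0)."""
    code = 0
    for x in vector:
        code = (code << 1) | (1 if x > 0 else 0)
    return code


def search(query, index, k=2):
    """Return the k item ids whose codes are closest to the query in Hamming distance."""
    query_code = to_code(query)
    scored = [
        (bin(query_code ^ code).count("1"), item_id)
        for item_id, code in index.items()
    ]
    return [item_id for _, item_id in sorted(scored)[:k]]


# Hypothetical item embeddings; ids and values are made up for illustration.
items = {
    "cat_photo": [0.9, -0.3, 0.4, -0.8],
    "dog_photo": [0.7, -0.1, 0.2, 0.5],
    "car_photo": [-0.6, 0.8, -0.9, 0.3],
}
index = {item_id: to_code(vector) for item_id, vector in items.items()}

results = search([0.8, -0.2, 0.5, -0.4], index, k=2)
print(results)
```

Only the compact codes are compared at query time, which is where the storage and speed benefits described above come from.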

Conclusion

Binary quantization offers a powerful solution for handling the complexities of high-dimensional vector data in vector databases. By converting high-dimensional vectors into compact binary codes, this technique drastically reduces storage requirements and accelerates retrieval times.

Furthermore, integrating it with advanced indexing methods can further enhance search accuracy and efficiency, making it a versatile tool in the evolving landscape of information retrieval. Vector databases that store high-dimensional data can use fast storage hardware to accelerate your workload, whether that is AI training or RAG-based applications. Configure a SabrePC Deep Learning server with ample storage drive bays to run your workload.