What is GDDR Memory?

GDDR stands for Graphics Double Data Rate, a type of memory designed specifically for graphics cards. It is similar to the DDR memory used as system memory in most computers, but it has been optimized for graphics workloads. GDDR typically offers higher bandwidth than DDR, which means that it can transfer more data per second.

GDDR6 is the most recent memory standard in shipping GPUs, launched with a peak per-pin data rate of 16Gb/s (later GDDR6 chips run faster). Found in the majority of GPUs, including the NVIDIA RTX 6000 Ada and the AMD Radeon PRO W7900, GDDR6 is a mature standard that is still used in 2024 GPUs.

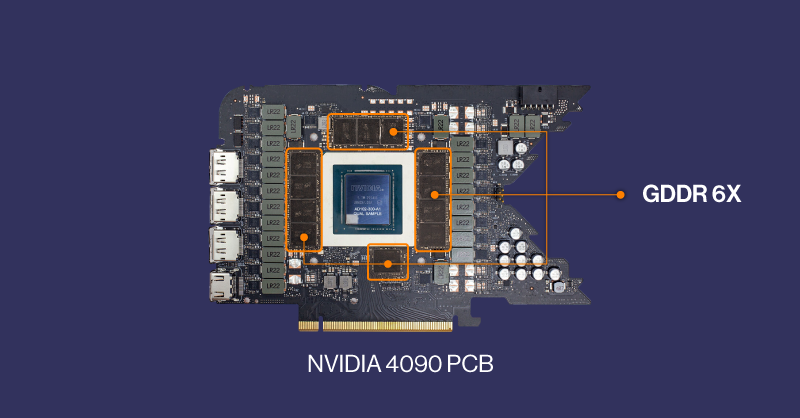

NVIDIA also collaborated with Micron to develop GDDR6X, a successor of sorts to GDDR6. We say "of sorts" for two reasons: the hardware is largely unchanged between the two apart from the signaling, which moves from NRZ to PAM4, and GDDR6X is not a JEDEC standard, since NVIDIA is its sole user. GDDR6X increases the per-pin data rate to 21Gb/s. GDDR7 is the next GDDR standard and should be widely adopted by all.

Both GDDR6 and GDDR6X have a maximum memory bus width of 384 bits as of 2024. GDDR memory comes as individual chips that are soldered to the PCB surrounding the GPU die. This modular, cost-effective design means a single GPU die can ship in SKUs with different memory capacities. For example, the NVIDIA RTX 4090 (24GB of GDDR6X) and the RTX 6000 Ada (48GB of GDDR6 ECC) both use the AD102 GPU die but target different use cases based on their memory configurations. There have even been some DIY cases where a skilled individual replaced 1GB GDDR6 memory chips with 2GB chips to effectively double their VRAM (though we strongly recommend against this, as it can cause a host of issues).
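To make the relationship between bus width and per-pin rate concrete, here is a minimal sketch of the usual back-of-the-envelope formula: theoretical peak bandwidth is bus width times per-pin data rate, divided by 8 to convert bits to bytes. The function name `peak_bandwidth_gbs` is our own, and the result is a theoretical ceiling rather than a measured figure.

```python
def peak_bandwidth_gbs(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Each pin on the bus moves pin_rate_gbps gigabits per second;
    dividing by 8 converts gigabits to gigabytes.
    """
    return bus_width_bits * pin_rate_gbps / 8


# Figures quoted in this article: a 384-bit bus at 16 Gb/s (GDDR6)
# and at 21 Gb/s (GDDR6X).
gddr6 = peak_bandwidth_gbs(384, 16)
gddr6x = peak_bandwidth_gbs(384, 21)

print(f"GDDR6  @ 16 Gb/s: {gddr6:.0f} GB/s")   # 768 GB/s
print(f"GDDR6X @ 21 Gb/s: {gddr6x:.0f} GB/s")  # 1008 GB/s
```

The same bus gets roughly 30% more bandwidth from the faster per-pin rate alone, which is why a signaling change like PAM4 matters even when the bus width stays at 384 bits.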

What is HBM Memory?

HBM stands for High Bandwidth Memory, a newer type of memory that has been developed specifically for use in GPUs. (Funnily enough, saying "HBM memory" says memory twice: High Bandwidth Memory memory.)

HBM memory is designed to provide a much wider memory bus than GDDR memory, which means that it can transfer even more data at once. A single HBM pin is not as fast as a single GDDR6 pin, but running each pin slower is what makes HBM more power efficient than GDDR memory, which can be an important consideration for power-constrained devices.

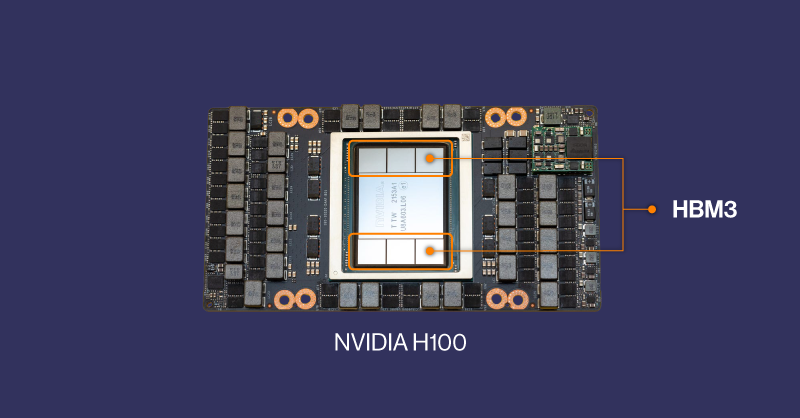

HBM memory sits on the same package as the GPU die, right beside it, and is stacked. For example, a stack of four HBM DRAM dies (4‑Hi), each with two 128‑bit channels, has a total width of 1024 bits (4 dies * 2 channels * 128 bits). Because HBM memory is built into the GPU package as memory chiplet modules, there is less room for error and less modularity. Therefore, a single GPU die SKU is not as easily memory-configurable as a GDDR equipped GPU.
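The stack arithmetic above can be sketched in a few lines. This is only an illustration of the 4‑Hi example from this article; the function name `hbm_stack_width_bits` and its default arguments are our own, and other stack heights or channel layouts would need different inputs.

```python
def hbm_stack_width_bits(dies: int, channels_per_die: int = 2,
                         bits_per_channel: int = 128) -> int:
    """Interface width of one HBM stack, following the 4-Hi example."""
    return dies * channels_per_die * bits_per_channel


stack_width = hbm_stack_width_bits(dies=4)
gddr_bus_width = 384  # widest GDDR6/GDDR6X bus cited in this article

print(f"One 4-Hi HBM stack: {stack_width} bits")
print(f"Versus a full 384-bit GDDR bus: {stack_width / gddr_bus_width:.2f}x wider")
```

In other words, a single HBM stack already exposes a wider interface than the entire GDDR bus on a flagship card, before any additional stacks are added.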

The most widely adopted recent HBM generation is HBM3, found in the NVIDIA H100 with a 5120-bit bus and over 2TB/s of memory bandwidth. HBM3 is also found in the rival AMD Instinct MI300X, with an 8192-bit bus and over 5.3TB/s of memory bandwidth. NVIDIA has also introduced HBM3e, which offers even more memory bandwidth, in the GH200 and H200, the first accelerators and processors to use it. HBM equipped hardware is ripping fast. A big reason accelerator GPUs like the H100 and MI300X need HBM is the interconnectivity between multiple GPUs: when GPUs communicate with one another, a wide bus and fast data transfer rates are paramount in reducing the bottleneck of moving data from one GPU to another.
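As a rough sanity check on these figures, the sketch below works backwards from the quoted bus widths and bandwidths to a stack count and an implied per-pin rate. It assumes every stack is 1024 bits wide, as in the 4‑Hi example earlier, and it uses the rounded bandwidth numbers from this article, so treat the results as approximations rather than official specifications. The helper names are our own.

```python
STACK_WIDTH_BITS = 1024  # assumption: one HBM stack, per the 4-Hi example


def stacks_for_bus(bus_width_bits: int) -> int:
    """Number of 1024-bit stacks needed to make up a given bus width."""
    return bus_width_bits // STACK_WIDTH_BITS


def implied_pin_rate_gbps(bandwidth_gbs: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gb/s) implied by a total bandwidth in GB/s."""
    return bandwidth_gbs * 8 / bus_width_bits


# (bus width in bits, bandwidth in GB/s) as quoted in this article
gpus = {
    "NVIDIA H100": (5120, 2000),
    "AMD Instinct MI300X": (8192, 5300),
}

for name, (bus, bandwidth) in gpus.items():
    rate = implied_pin_rate_gbps(bandwidth, bus)
    print(f"{name}: {stacks_for_bus(bus)} stacks, about {rate:.2f} Gb/s per pin")
```

Both implied per-pin rates land well below the 16Gb/s quoted for GDDR6, which illustrates the trade: HBM gets its bandwidth from a very wide bus of slower pins rather than a narrow bus of fast ones.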

GDDR vs. HBM Memory

So, which type of memory is better for GPUs? The answer is that it depends on the specific use case.

GDDR memory equipped GPUs are generally:

- More accessible since they are the mainstream GPU type

- Less expensive, since the memory chips are soldered onto the PCB instead of being integrated into the GPU package

- Sufficient for most mainstream applications, which will not max out the memory bandwidth

- Less energy efficient, since GDDR often uses more power

HBM memory equipped GPUs are generally:

- Less accessible and more niche

- Highly expensive and found in flagship accelerators like the H100

- Used mainly in HPC and highly niche workloads that require the most bandwidth

- More power efficient, with significantly wider buses that make up for a lower per-pin rate

Let's talk about workloads. Again, most applications won’t ever need HBM memory. Higher memory bandwidth is most important for workloads that leverage massive amounts of data. Workloads like simulation, real time analytics, dense AI training, and complex AI inferencing can all benefit from more memory bandwidth.

It is also important to consider that the fastest GDDR equipped GPUs can work just fine if the workload is parallelized across several of them. The NVIDIA RTX 6000 Ada is a highly capable flagship GPU with a high memory bandwidth of 960GB/s, well suited to small to medium scale AI training, rendering, analytics, simulation, and data intensive workloads. Outfit a server or workstation with a multi-GPU setup and the work can be parallelized and split for even more performance (if the application or workload supports it).
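To get a feel for what splitting work across GPUs buys you, here is a minimal sketch that estimates the time to read a working set once from GPU memory. It is an idealized lower bound: it assumes the workload is purely bandwidth-bound, splits evenly, and pays no interconnect or synchronization cost. The 48 GB working set is a hypothetical figure chosen for illustration, and `stream_time_ms` is our own helper name.

```python
def stream_time_ms(data_gb: float, bandwidth_gbs: float, num_gpus: int = 1) -> float:
    """Idealized time (ms) to read data_gb once, split evenly across GPUs.

    Assumes perfect scaling with no communication overhead.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    per_gpu_gb = data_gb / num_gpus
    return per_gpu_gb / bandwidth_gbs * 1000


WORKING_SET_GB = 48.0        # hypothetical working set
RTX_6000_ADA_GBS = 960.0     # memory bandwidth quoted in this article

for gpus in (1, 2, 4):
    t = stream_time_ms(WORKING_SET_GB, RTX_6000_ADA_GBS, gpus)
    print(f"{gpus} GPU(s): {t:.1f} ms per pass")
```

Real workloads rarely scale this cleanly, but the estimate shows why a multi-GPU GDDR system can be a reasonable alternative when the work divides well.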

However, HBM equipped GPUs like the NVIDIA H100 can significantly boost productivity (though at a high cost) for enterprise deployments that value the time saved. More performance and less waiting can enable faster breakthroughs. High level deployments like ChatGPT rely on clusters of H100s working in tandem to run real time inferencing and generative AI for millions of users at a time, processing prompts and delivering outputs in real time.

Without blisteringly fast high bandwidth memory and peak performance, enterprise deployments can become very slow and nearly unusable. A good example of this was the launch month of ChatGPT. OpenAI probably felt they had enough HBM enabled NVIDIA GPUs to handle a large number of concurrent users but had no idea how popular their new generative AI chatbot was going to be. They had to cap the concurrent user count, asking site visitors to be patient while they scaled their infrastructure. On top of that, ChatGPT was slow and sometimes unresponsive even for those granted access. However, in perspective, ChatGPT likely would not even be possible without these interconnected, high bandwidth memory equipped GPUs.

Conclusion

In conclusion, both GDDR memory and HBM memory have their advantages and disadvantages. GDDR memory is less expensive and is a good choice for applications that require high bandwidth but don’t need the absolute highest performance. HBM memory, on the other hand, is more expensive but provides even higher bandwidth and is a good choice for applications that require the upper echelon of high performance. When choosing between these two types of memory, it’s important to consider the use case and cost, evaluating whether the time saved will make an impact in your workload.

Have any questions about configuring your next workstation or high performance server? Contact our team to decide what's best for your deployment! Or explore our plethora of platforms featuring both GDDR and HBM equipped GPUs on our site.