We have talked about underfitting and overfitting a couple of times before, but we haven't had a dedicated blog on the differences between these two phenomena and what explicitly causes them when training machine learning models.

Machine learning is a complex field, and one of its most important challenges is building models that can predict outcomes for new data. Building a model that fits the training data perfectly is easy; the real test is whether it can accurately predict outcomes for data it has never seen.

What is Overfitting?

Overfitting occurs when a model learns the training data too well, to the point where it captures not only the underlying patterns and relationships but also the noise and random fluctuations present in the data. In simpler terms, an overfit model "memorizes" the training data rather than learning patterns that carry over to test data and real-world data.

Imagine you have a dataset with some inherent variability or noise. An overfitting model will try to fit itself so closely to each data point in the training set that it creates an intricate and convoluted decision boundary, effectively "overthinking" the problem. This level of complexity might seem like a good thing initially because the model achieves extremely high accuracy on the training data. However, it's a double-edged sword.

Now introduce new, unseen data to the model for prediction or classification. Because the overfit model has learned the quirks and idiosyncrasies of the training data, it performs poorly on this new data: it has become too specialized to generalize its knowledge to scenarios beyond the training set.

In essence, overfitting is like trying to fit a puzzle piece perfectly to one specific spot but realizing that it doesn't quite fit anywhere else in the puzzle. This is a problem because the ultimate goal in machine learning is to build models that can make accurate predictions or classifications on new, unseen data – not just on the data they were trained on.
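The example below is a minimal Python sketch of this effect. It rests on a few illustrative assumptions: the data come from a noisy sine wave, and 1-nearest-neighbor regression stands in for an over-flexible model. The sample sizes, noise level and seeds are also arbitrary. Every training point is its own nearest neighbor, so the model reproduces the training targets exactly, noise included. It has no such advantage on fresh points drawn from the same process.

```python
import math
import random


def make_data(n, noise=0.3, seed=0):
    """Sample n points from y = sin(2*pi*x) + Gaussian noise, x uniform in [0, 1)."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


def nearest_neighbor_predict(train_x, train_y, x):
    """1-NN regression: return the target of the closest training point."""
    i = min(range(len(train_x)), key=lambda j: abs(train_x[j] - x))
    return train_y[i]


def mse(train_x, train_y, xs, ys):
    """Mean squared error of the 1-NN model on the points (xs, ys)."""
    preds = [nearest_neighbor_predict(train_x, train_y, x) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


train_x, train_y = make_data(40, seed=1)
test_x, test_y = make_data(200, seed=2)

# Each training point is its own nearest neighbor, so its noise is "memorized".
train_err = mse(train_x, train_y, train_x, train_y)
test_err = mse(train_x, train_y, test_x, test_y)
print(f"train MSE: {train_err:.3f}")  # exactly 0.000
print(f"test MSE:  {test_err:.3f}")   # > 0: the memorized noise does not transfer
```

A perfect training score paired with a worse test score is the signature of overfitting described above.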


What is Underfitting?

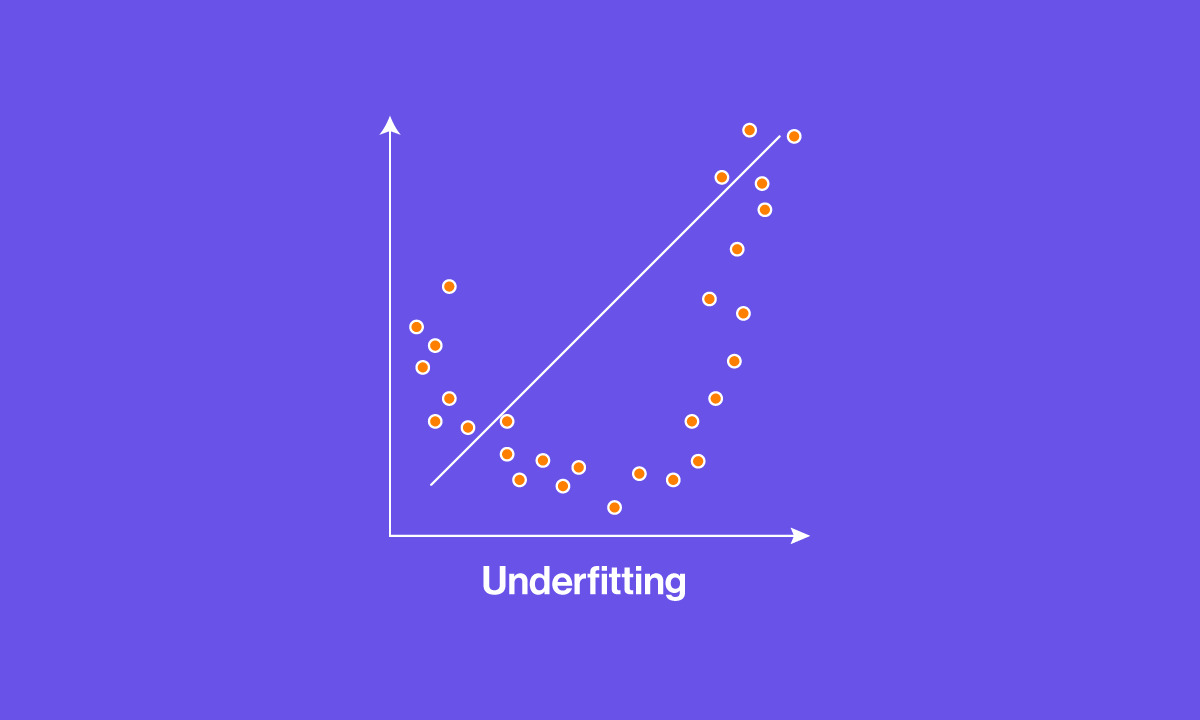

In contrast to overfitting, underfitting occurs when a model is overly simplistic and lacks the capacity to capture the underlying patterns, relationships, and nuances present in the training data. It performs poorly on the training data itself, and therefore on test data and real-world data as well.

Imagine trying to fit a straight line through a dataset that clearly exhibits a complex, nonlinear relationship. An underfitting model might make such a simplification, assuming that a linear model is adequate for describing the data. As a result, it fails to grasp the intricacies and subtleties in the dataset, resulting in a model that performs poorly not only on the training data but also on new, unseen data.
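Here is a minimal sketch of that situation. It assumes noise-free samples from one period of a sine wave, an arbitrary stand-in for a "clearly nonlinear" relationship. Ordinary least squares finds the best possible straight line, yet the error stays high on the training points and on unseen points alike.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x (closed form)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / sxx
    return my - b * mx, b


def mse(a, b, xs, ys):
    """Mean squared error of the line y = a + b*x on the points (xs, ys)."""
    return sum((a + b * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def target(x):
    # A clearly nonlinear relationship: one full period of a sine wave.
    return math.sin(2 * math.pi * x)


train_x = [i / 49 for i in range(50)]          # 50 evenly spaced points in [0, 1]
test_x = [(i + 0.5) / 50 for i in range(50)]   # unseen points in between
train_y = [target(x) for x in train_x]
test_y = [target(x) for x in test_x]

a, b = fit_line(train_x, train_y)
train_err = mse(a, b, train_x, train_y)
test_err = mse(a, b, test_x, test_y)
print(f"line: train MSE {train_err:.3f}, test MSE {test_err:.3f}")
```

Even with no noise at all, neither error comes close to zero. The limitation is the model's form, not the data.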

Underfitting can be likened to using a blunt instrument to solve a delicate problem. The model's inability to capture the underlying complexity makes it ill-suited for real-world applications where accurate predictions or classifications are essential.

Identifying underfitting is often easier than detecting overfitting because underfit models tend to exhibit consistently poor performance across both the training and test datasets. This suboptimal performance suggests that the model is too simplistic to be useful in practical scenarios.
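That reasoning can be written down as a rough rule of thumb. The helper below is hypothetical. The name `diagnose`, its `target_error` and `max_gap` thresholds, and the example numbers are all illustrative assumptions that you would set for your own problem and error metric.

```python
def diagnose(train_error, val_error, target_error, max_gap):
    """Rough reading of training vs. validation error (lower error = better).

    target_error: the highest error you would accept (problem-specific, your choice).
    max_gap: how much worse validation error may be than training error.
    """
    if train_error > target_error:
        # Poor even on the data it was fitted to: the model is likely too simple.
        return "underfitting"
    if val_error - train_error > max_gap:
        # Good on training data but much worse on unseen data.
        return "overfitting"
    return "reasonable fit"


print(diagnose(0.30, 0.32, target_error=0.10, max_gap=0.05))  # underfitting
print(diagnose(0.01, 0.25, target_error=0.10, max_gap=0.05))  # overfitting
print(diagnose(0.08, 0.10, target_error=0.10, max_gap=0.05))  # reasonable fit
```

The training error is checked first because a model that cannot fit its own training data is the easier, unambiguous case.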

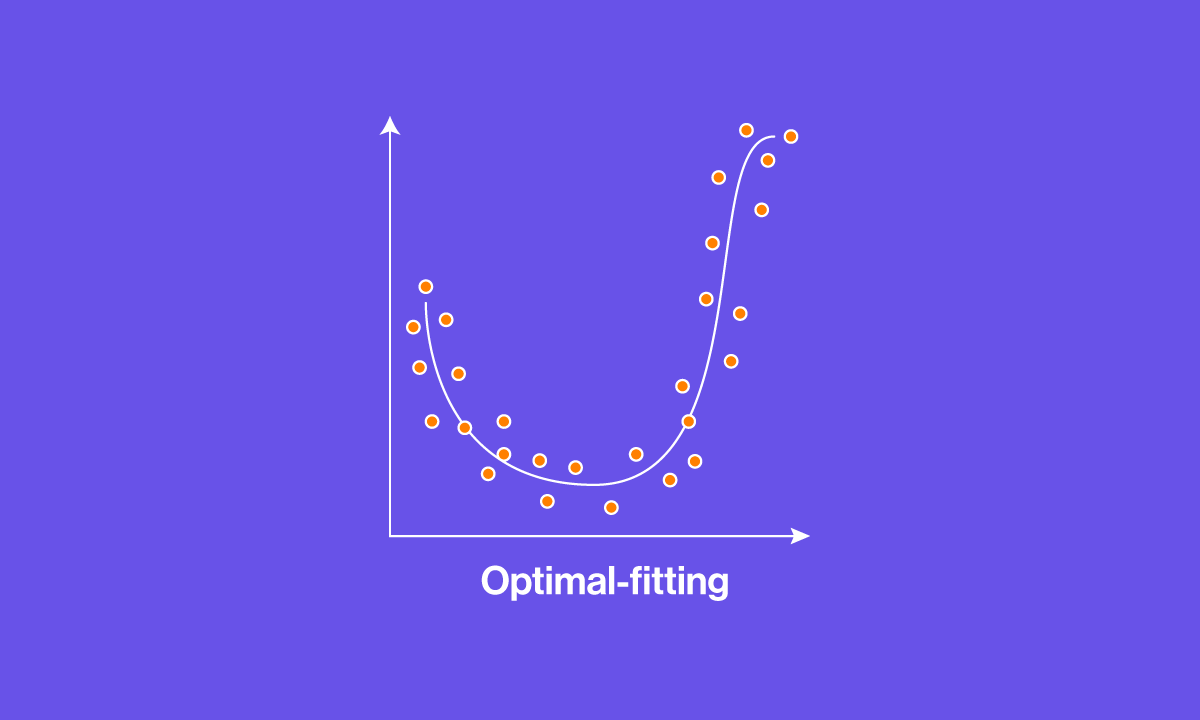

Optimal Fitting and Good Generalization

Good generalization signifies a model's ability to extend its learned knowledge beyond the confines of the training data. In simpler terms, a well-generalized model does not just memorize the data it has seen; it discerns and internalizes the underlying patterns and relationships present in that data.

Imagine you're teaching a child to recognize different breeds of dogs. You wouldn't want the child to memorize specific dogs but rather learn the distinguishing characteristics that define each breed. That way, when the child encounters a dog they haven't seen before, they can still make an educated guess about its breed based on their understanding of the common traits.

Similarly, a machine learning model exhibiting good generalization can take its knowledge of the training data and apply it successfully to new, unseen data. This is a critical attribute because the real test of a model's worth lies in its ability to provide accurate predictions, classifications, or insights in practical, uncharted scenarios.

Achieving good generalization requires navigating a delicate balance. On one side of the spectrum, we have overfitting, where a model becomes overly complex, effectively "memorizing" the training data and failing to adapt to new situations. On the other side, we have underfitting, where a model is overly simplistic and lacks the capacity to capture the richness of the data. It is important to note that underfitting is relatively easy to detect and to address; the real challenge arises when your model overfits and you are in charge of taming and tuning it.
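A standard way to make this balance precise is the bias-variance decomposition of squared error. Assume the data are generated as $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has zero mean and variance $\sigma^2$ and is independent of the fitted model $\hat f$. Taking expectations over possible training sets and the noise, the expected error at a point $x$ splits into three terms:

$$
\mathbb{E}\big[(y - \hat f(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[\big(\hat f(x) - \mathbb{E}[\hat f(x)]\big)^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Underfit models tend to have high bias, and overfit models tend to have high variance. The noise term cannot be removed by any model.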

Striking this balance involves several key considerations:

- Model Complexity: The model must be complex enough to capture essential patterns in the data but not so complex that it starts fitting noise and random variations.

- Feature Selection: Choosing the right features or input variables is crucial. Including irrelevant or redundant features can lead to overfitting, while omitting important ones can result in underfitting.

- Hyperparameter Tuning: Properly configuring hyperparameters like learning rates, regularization strengths, and tree depths can significantly impact a model's generalization capabilities.

- Validation and Testing: Regularly evaluating the model's performance on validation and test datasets helps ensure it's not just memorizing the training data but genuinely learning to generalize.
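These considerations can be sketched together using k-nearest-neighbor regression. Here the number of neighbors `k` is a hyperparameter that controls complexity: a small `k` follows individual training points closely, and a large `k` smooths over them. This is a minimal illustration with several assumptions: the noisy sine-wave data, the candidate grid for `k` and the seeds are arbitrary choices, not a recommended tuning procedure. The model is fitted on one sample and each `k` is scored on a separate held-out sample. The `k` with the lowest validation error is kept.

```python
import math
import random


def make_data(n, noise=0.3, seed=0):
    """Sample n points from y = sin(2*pi*x) + Gaussian noise, x uniform in [0, 1)."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


def knn_predict(train_x, train_y, x, k):
    """k-NN regression: average the targets of the k closest training points."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def mse(train_x, train_y, xs, ys, k):
    """Mean squared error of the k-NN model on the points (xs, ys)."""
    preds = [knn_predict(train_x, train_y, x, k) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


train_x, train_y = make_data(60, seed=1)
val_x, val_y = make_data(60, seed=2)  # held out: never used for fitting

# Small k = very flexible model (overfitting risk);
# k = 60 averages every training point, i.e. predicts a constant (underfitting).
candidates = [1, 2, 3, 5, 8, 13, 21, 34, 60]
results = {}
for k in candidates:
    train_err = mse(train_x, train_y, train_x, train_y, k)
    val_err = mse(train_x, train_y, val_x, val_y, k)
    results[k] = (train_err, val_err)
    print(f"k={k:2d}  train MSE {train_err:.3f}  validation MSE {val_err:.3f}")

# Choose the hyperparameter by validation error, never by training error.
best_k = min(candidates, key=lambda k: results[k][1])
print("chosen k:", best_k)
```

Training error alone would always favor `k = 1`, which scores zero on the training set. The held-out set is what exposes the trade-off. A final, untouched test set would still be needed for an unbiased estimate of the chosen model's performance.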

To address underfitting, it's essential to consider increasing the model's complexity appropriately. This might involve using more advanced algorithms, incorporating additional features, or tuning hyperparameters to strike a balance between simplicity and the ability to capture the essential features of the data. Achieving this balance is critical for building models that can provide meaningful insights and accurate predictions, making them valuable tools in various machine learning applications.
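The "incorporating additional features" route can be sketched with polynomial features. Suppose a straight line underfits noise-free samples of one sine-wave period, an assumed toy setup. Adding `x**2` and `x**3` as extra inputs to the same least-squares model raises its capacity enough to follow the curve. The small Gaussian-elimination solver is included only to keep the sketch dependency-free; in practice you would use a numerical library.

```python
import math


def solve(a, b):
    """Solve the square linear system a @ w = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def fit_poly(xs, ys, degree):
    """Ordinary least squares on the features 1, x, x^2, ..., x^degree."""
    size = degree + 1
    feats = [[x ** j for j in range(size)] for x in xs]
    xtx = [[sum(f[i] * f[j] for f in feats) for j in range(size)] for i in range(size)]
    xty = [sum(f[i] * y for f, y in zip(feats, ys)) for i in range(size)]
    return solve(xtx, xty)


def mse(w, xs, ys):
    """Mean squared error of the polynomial with coefficients w."""
    preds = [sum(c * x ** j for j, c in enumerate(w)) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def target(x):
    return math.sin(2 * math.pi * x)


train_x = [i / 49 for i in range(50)]
test_x = [(i + 0.5) / 50 for i in range(50)]
train_y = [target(x) for x in train_x]
test_y = [target(x) for x in test_x]

errors = {}
for degree in (1, 3):  # degree 1 = straight line; degree 3 adds x^2 and x^3 features
    w = fit_poly(train_x, train_y, degree)
    errors[degree] = (mse(w, train_x, train_y), mse(w, test_x, test_y))
    print(f"degree {degree}: train MSE {errors[degree][0]:.4f}, "
          f"test MSE {errors[degree][1]:.4f}")
```

Both errors drop together, which is what fixing underfitting looks like. Pushing the degree much higher on noisy data would eventually trade this problem for overfitting.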

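To tackle overfitting, we need to rein in the model's complexity relative to the amount of data it learns from, so that it generalizes instead of memorizing. Common approaches include simplifying the model, adding regularization, gathering more training data, and stopping training early once validation performance stops improving. This balance is crucial for creating models that can adapt to different situations, making them truly valuable in practical applications.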
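Regularization is one common way to tame an overfitting model; the sketch below uses L2 (ridge) regularization. It rests on several illustrative assumptions: the degree-9 polynomial, the 15 noisy sine-wave samples, the penalty strength `lam=0.1` and the seeds. For simplicity, it also penalizes the intercept along with the other coefficients. The penalty shrinks the large coefficients that the unregularized fit uses to chase noise. This always costs some training accuracy, and on data like this it typically, but not necessarily, lowers the test error.

```python
import math
import random


def solve(a, b):
    """Solve the square linear system a @ w = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def fit_poly(xs, ys, degree, lam=0.0):
    """Least squares on 1, x, ..., x^degree; lam > 0 adds an L2 (ridge) penalty.

    The penalty is applied to every coefficient, intercept included.
    """
    size = degree + 1
    feats = [[x ** j for j in range(size)] for x in xs]
    xtx = [[sum(f[i] * f[j] for f in feats) + (lam if i == j else 0.0)
            for j in range(size)] for i in range(size)]
    xty = [sum(f[i] * y for f, y in zip(feats, ys)) for i in range(size)]
    return solve(xtx, xty)


def mse(w, xs, ys):
    """Mean squared error of the polynomial with coefficients w."""
    preds = [sum(c * x ** j for j, c in enumerate(w)) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def make_data(n, noise=0.2, seed=0):
    """Sample n points from y = sin(pi*x) + Gaussian noise, x uniform in [-1, 1]."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [math.sin(math.pi * x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


train_x, train_y = make_data(15, seed=3)
test_x, test_y = make_data(200, seed=4)

w_plain = fit_poly(train_x, train_y, degree=9)           # flexible, unregularized
w_ridge = fit_poly(train_x, train_y, degree=9, lam=0.1)  # same features, shrunk weights

for name, w in (("plain", w_plain), ("ridge", w_ridge)):
    norm = math.sqrt(sum(c * c for c in w))
    print(f"{name}: train MSE {mse(w, train_x, train_y):.3f}, "
          f"test MSE {mse(w, test_x, test_y):.3f}, weight norm {norm:.1f}")
```

In a real project, `lam` would itself be chosen on a validation set, just like any other hyperparameter.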

Explore our blogs on Epochs, Batch Size, Iterations and Mistakes You Want to Avoid in AI Development

Have any questions? Looking for hardware to power a machine learning model?

SabrePC stocks valuable computer components from professional GPUs to fast NVMe storage perfect for your on-premises machine learning solutions.

Contact us today for more information!