An Introduction to the Most Common Neural Networks

Neural networks have become extremely popular in recent years, but there is still some confusion about their nuanced differences. To develop a robust AI model, it is imperative to understand the characteristics of the various types of neural networks and the problems they excel at solving.

We will discuss six popular neural network architectures that everyone working in AI research should be familiar with, plus a couple of bonus architectures! Familiarizing yourself with them will give you a better understanding of how they differ and where each one is applied.

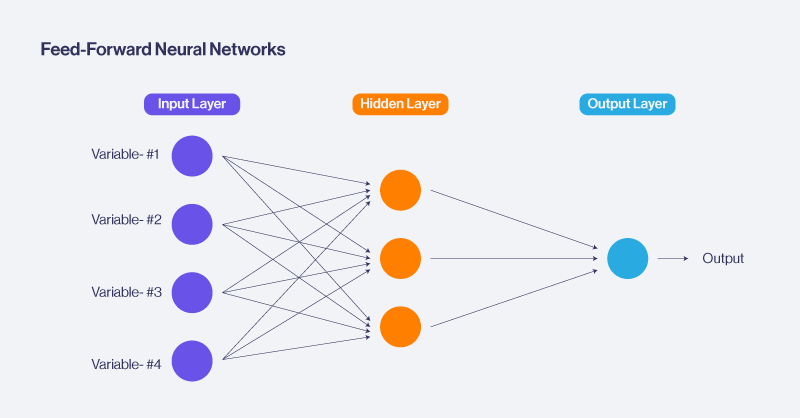

Feed-Forward Neural Network (The Foundation)

The feed-forward neural network (FNN) is the foundational architecture on which the other neural networks we discuss later are built.

An FNN consists of an input layer, one or more hidden layers, and an output layer. It has no feedback connections: information flows in one direction, from input to output, with no loops that feed information back into previous layers. This is the most basic type of neural network. It became popular largely thanks to backpropagation, popularized by David Rumelhart, Geoffrey Hinton, and Ronald Williams in 1986, and later to hardware advances that made it practical to add many more hidden layers without worrying too much about computation time.
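To make the one-directional flow concrete, here is a minimal forward-pass sketch in plain Python. The 2-2-1 layer sizes, the weights, and the use of ReLU in the hidden layer are illustrative assumptions, not values from any particular model.

```python
def relu(values):
    # ReLU activation: negative values become 0
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    # Fully connected layer: weights[j][i] connects input i to neuron j
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(x, layers):
    # Information flows strictly forward: input -> hidden layer(s) -> output
    h = x
    for idx, (weights, biases) in enumerate(layers):
        h = dense(h, weights, biases)
        if idx < len(layers) - 1:   # no activation on the output layer
            h = relu(h)
    return h

# Hypothetical 2-2-1 network: one hidden layer with two neurons
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, 0.0]),  # hidden layer
    ([[1.0, 2.0]], [0.5]),                    # output layer
]
print(forward([1.0, 2.0], layers))  # [6.5]
```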

Feed-forward neural networks were one of the first major developments in the field of neural networks, and they laid the groundwork for the more complex architectures that followed.

Feed-forward neural networks are generally suited for supervised learning, where the network is shown input-output pairs of numerical data and the connection weights are adjusted iteratively to minimize the difference between the predicted output and the actual output.
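The weight-adjustment loop can be sketched with the simplest possible feed-forward model: a single linear neuron fitted by gradient descent on mean squared error. The data, learning rate, and epoch count are assumptions chosen for illustration; networks with hidden layers compute the same kind of gradients via backpropagation.

```python
def train_neuron(xs, ys, lr=0.05, epochs=2000):
    # A single linear neuron y_hat = w * x + b
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to shrink the prediction error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]   # generated from y = 2x + 1
w, b = train_neuron(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

The learned weights converge to the w = 2, b = 1 that generated the data.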

FNNs work well for relatively simple tasks, but they struggle with complex data relationships, such as the spatial structure of images or the ordering of words in text, which led to the development of more specialized neural networks. This brings us to our first two neural networks: Convolutional Neural Networks and Recurrent Neural Networks.

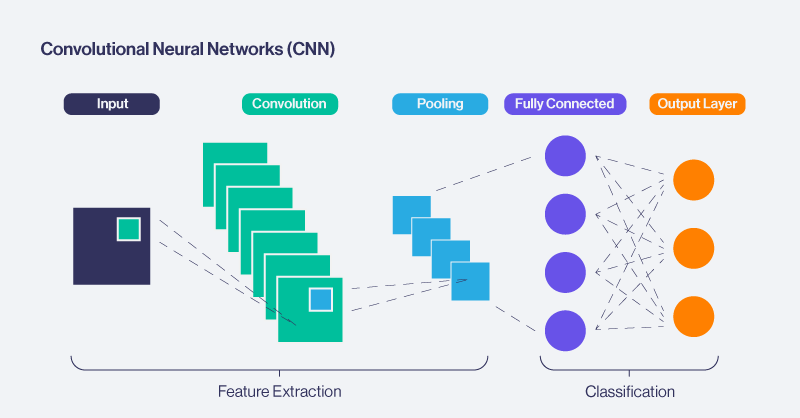

1. Convolutional Neural Networks (CNN)

A Convolutional Neural Network (CNN) is a type of artificial neural network designed for processing structured grid data, such as images. CNNs are particularly effective in computer vision tasks, where the goal is to recognize patterns and extract features from visual data.

CNNs can be thought of as automatic feature extractors. Their convolutional layers slide small learned filters over the image so that each output value depends on a patch of neighboring pixels, pooling layers downsample the resulting feature maps, and a final prediction layer (typically fully connected) maps the extracted features to an output such as a class label. Unlike traditional neural networks, CNNs use these specialized convolutional and pooling layers to efficiently learn hierarchical representations of visual data.
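The two core CNN operations can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions: a single-channel image, one hand-made 2x2 vertical-edge filter (a real CNN learns its filters), no padding, stride 1, and 2x2 max pooling.

```python
def conv2d(image, kernel):
    # "Valid" 2-D convolution (cross-correlation, as in most DL libraries):
    # slide the kernel over the image and take a weighted sum at each position
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def max_pool2d(fmap, size=2):
    # Downsample by keeping the largest value in each non-overlapping window
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

# 5x5 image: dark on the left, bright on the right (a vertical edge)
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical vertical-edge filter; a CNN would learn filters like this
kernel = [[-1, 1],
          [-1, 1]]

feature_map = conv2d(image, kernel)   # 4x4, every row is [0, 2, 0, 0]
pooled = max_pool2d(feature_map)      # 2x2: [[2, 0], [2, 0]]
```

The filter responds only where brightness changes, and pooling keeps that response while halving the resolution.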

Yann LeCun and colleagues popularized this concept with LeNet-5 in 1998, a handwritten digit recognizer built from convolutional layers, subsampling layers (equivalent to pooling layers), and fully connected layers. After a period in which Support Vector Machines were more popular, CNNs returned to prominence in 2012 with AlexNet, which stacked multiple convolutional layers and was trained on GPUs to achieve state-of-the-art image recognition. Using GPUs to train these highly complex models and extract distinctive features quickly made CNNs the algorithm of choice for image classification challenges from then on.

CNNs are also the underlying architecture (the backbone) of many object detection algorithms, such as YOLO, RetinaNet, Faster R-CNN, and DETR (Detection Transformer). While CNNs are powerful for image-related tasks, they typically require large datasets for training and fine-tuning.

2. Recurrent Neural Networks (LSTM/GRU/Attention)

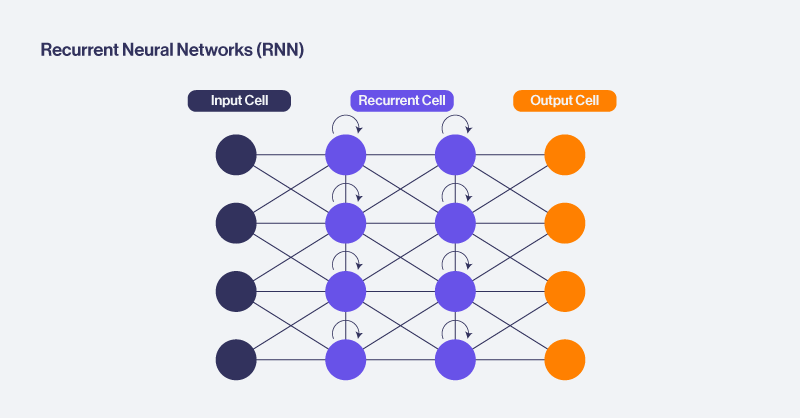

Recurrent Neural Networks (RNNs) stand out in the neural network landscape for their ability to process sequential data step by step, which makes them well suited to natural language processing (NLP) and time series analysis. Their distinctive looping connections let the network maintain an internal memory, or hidden state, that captures dependencies and patterns across the sequence.

What CNNs are to images, RNNs are to text. RNNs can learn the sequential structure of text, where each word depends on the previous words or sentences, which makes them useful for language translation, sentiment analysis, and text generation. (Though transformers have taken over here. More on them later!)

An RNN is easiest to picture unrolled over time: the same RNN cell runs on each word token and passes its hidden state on to the next step. For a sequence of length 4 like “the quick brown fox”, the RNN produces 4 output vectors, which can be concatenated and fed into a dense feed-forward network to solve the final task, such as language modeling or classification.
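A minimal sketch of an unrolled RNN over “the quick brown fox” might look like this. The 2-D embeddings and weights are hypothetical placeholders (a trained model learns them), and here each step's hidden state doubles as its output vector.

```python
import math

# Hypothetical 2-D word embeddings (a real model learns these)
EMBED = {"the": [0.1, 0.0], "quick": [0.5, -0.2],
         "brown": [-0.3, 0.4], "fox": [0.9, 0.1]}

# Illustrative weights, shared by every time step
W_XH = [[0.5, -0.1], [0.2, 0.3]]   # input -> hidden
W_HH = [[0.1, 0.4], [-0.3, 0.2]]   # hidden -> hidden (the recurrent loop)
B_H = [0.0, 0.1]

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def rnn_cell(x, h_prev):
    # h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)
    return [math.tanh(xi + hi + bi)
            for xi, hi, bi in zip(matvec(W_XH, x), matvec(W_HH, h_prev), B_H)]

def run_rnn(tokens):
    h = [0.0, 0.0]                      # initial hidden state
    outputs = []
    for token in tokens:
        h = rnn_cell(EMBED[token], h)   # same cell, next token, carried-over state
        outputs.append(h)
    return outputs

outputs = run_rnn("the quick brown fox".split())
features = [v for h in outputs for v in h]   # concatenate 4 vectors -> 8 values
```

The 8 values in `features` are what would be passed on to the dense layer. Because the hidden state is carried forward, the output for “fox” here differs from the output for “fox” on its own.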

Long Short-Term Memory networks (LSTMs) and Gated Recurrent Units (GRUs) are RNN variants designed to remember information over longer periods (mitigating the vanishing gradient problem). They introduce gates that regulate the internal state by deciding what information to add to it or remove from it.
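To illustrate why gating helps, the sketch below compares a single-unit LSTM with a plain tanh RNN on one input signal followed by 50 empty steps. All parameters are hand-picked assumptions: the forget gate is biased toward keeping the cell state, which a trained LSTM would have to learn from data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical hand-picked parameters (a trained LSTM learns these)
P = {"wf": 0.0, "uf": 0.0, "bf": 5.0,    # forget gate biased toward "keep"
     "wi": 10.0, "ui": 0.0, "bi": -5.0,  # input gate opens only for large inputs
     "wg": 2.0, "ug": 0.0, "bg": 0.0,    # candidate value
     "wo": 0.0, "uo": 0.0, "bo": 0.0}    # output gate

def lstm_step(x, h, c, p=P):
    f = sigmoid(p["wf"] * x + p["uf"] * h + p["bf"])    # how much old state to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h + p["bi"])    # how much new info to write
    g = math.tanh(p["wg"] * x + p["ug"] * h + p["bg"])  # candidate new info
    o = sigmoid(p["wo"] * x + p["uo"] * h + p["bo"])    # how much state to expose
    c = f * c + i * g
    h = o * math.tanh(c)
    return h, c

def vanilla_step(x, h, w=2.0, u=0.5):
    # Plain RNN: the old state is squashed and scaled at every step
    return math.tanh(w * x + u * h)

inputs = [1.0] + [0.0] * 50   # one signal, then 50 empty steps
h, c, h_plain = 0.0, 0.0, 0.0
for x in inputs:
    h, c = lstm_step(x, h, c)
    h_plain = vanilla_step(x, h_plain)
```

After 50 steps the plain RNN's state has shrunk to nearly zero, while the LSTM's cell state `c` still holds most of the signal (about 0.68).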

RNNs, LSTMs, and GRUs have been used predominantly for language modeling tasks, where the objective is to predict the next word given a stream of input words, and for other tasks that have a sequential pattern. If you want to learn how to use RNNs for text classification tasks, take a look at this post.

Before we get to the next neural network, we need to say a little about attention mechanisms. Some words determine the sentiment of a text excerpt more than others, and a word with a negative connotation (such as an adjective) does not necessarily make the whole sentence negative, especially in slang and colloquial text. LSTMs and similar deep learning methods capture the sequence structure, but on their own they do not give higher weight to the more important words. An attention mechanism addresses this: it scores how important each word is to the meaning of the sentence and aggregates the word representations into a weighted sentence vector, so the most informative words contribute the most.
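Attention pooling of this kind can be sketched as a softmax over per-word scores followed by a weighted sum. The hidden states and the query vector below are hypothetical stand-ins for LSTM outputs and a learned parameter, and dot-product scoring is one common choice among several.

```python
import math

def softmax(scores):
    m = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(hidden_states, query):
    # Score each word's hidden state against a query vector (learned in practice)
    scores = [sum(h * q for h, q in zip(state, query)) for state in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    # Weighted sum: informative words contribute more to the sentence vector
    sentence = [sum(w * state[d] for w, state in zip(weights, hidden_states))
                for d in range(dim)]
    return weights, sentence

# Hypothetical LSTM outputs for "the movie was not bad"
words = ["the", "movie", "was", "not", "bad"]
states = [[0.1, 0.0], [0.2, 0.1], [0.0, 0.1], [0.9, 0.8], [0.7, 0.9]]
weights, sentence = attention_pool(states, query=[1.0, 1.0])
```

With these made-up values, “not” and “bad” receive most of the weight, so they dominate the sentence vector.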

Spoiler: transformer models have become better suited to most language processing, so why use an RNN? Recall the sequential nature of RNNs. When data depends on previous data, RNNs can capture dependencies that would be difficult to track with traditional “if/then” rules, and they work naturally with information that arrives over time. Because they process one step at a time with a fixed-size hidden state, RNNs are less compute-intensive and more responsive when data is fed to them in real time. For smaller datasets that do not need the large number of parameters and fine-tuning found in transformer models, RNNs can perform better because they are less susceptible to overfitting.

3. Transformer based LLMs

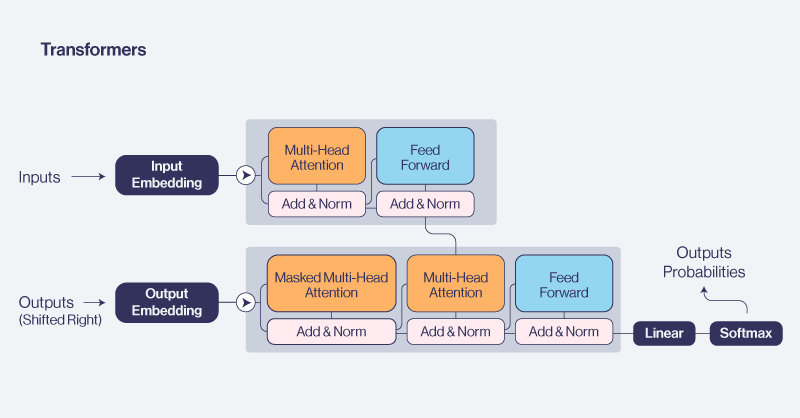

Transformers have become the de facto standard for Natural Language Processing (NLP) tasks, and models such as GPT-3 showed how far the architecture can be scaled.

In the past, LSTM and GRU architectures, along with the attention mechanism, were the state-of-the-art approach for language modeling and translation systems. The main problem with these architectures is that they are recurrent: they process a sentence one word at a time, so the computation cannot be parallelized across the sequence and the runtime grows as the sequence gets longer. That is why they do well on smaller datasets but struggle to scale to larger ones.

The Transformer, a model architecture first described in the 2017 paper “Attention Is All You Need,” omits recurrence and instead relies entirely on an attention mechanism to draw global dependencies between input and output, processing every position of the input sequence simultaneously. This makes training highly parallelizable, and it has made the transformer the architecture of choice for a wide range of NLP problems that involve more words and more complex text-based tasks.
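The core operation, scaled dot-product attention, computes softmax(QKᵀ/√d)·V for all positions at once. To keep the sketch short, it assumes the queries, keys, and values are the token embeddings themselves; a real transformer layer first applies learned projections and runs several attention heads in parallel.

```python
import math

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    # Simplification: Q = K = V = x (no learned W_Q, W_K, W_V projections)
    d = len(x[0])
    # Every token scores every other token; no recurrence over positions
    scores = [[sum(q * k for q, k in zip(qi, kj)) / math.sqrt(d) for kj in x]
              for qi in x]
    weights = [softmax(row) for row in scores]
    # Each output is a weighted mix of all value vectors
    out = [[sum(w * v[c] for w, v in zip(w_row, x)) for c in range(d)]
           for w_row in weights]
    return out, weights

tokens = [[1.0, 0.0, 1.0, 0.0],   # hypothetical 4-d token embeddings
          [0.0, 1.0, 0.0, 1.0],
          [1.0, 1.0, 0.0, 0.0]]
out, weights = self_attention(tokens)
```

Each row of `weights` is a probability distribution over all tokens, which is how every position can draw on the whole sequence in a single step.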

Consider a conversation with a transformer-based model such as ChatGPT. It takes into account the entire text prompt along with the earlier conversation in its context window, which lets it carry a conversation and answer accurately based on topics discussed previously. The attention mechanism lets a transformer model like ChatGPT weigh the importance of certain words, capture relationships between words anywhere in the given text, and deliver a compelling response to the topics presented.

Transformers can be tuned through various parameters and encodings, including positional encodings that tell the model where each token sits in the sequence, techniques for improving memory, refinements that sharpen attention on certain keywords, and more. In summary, transformers have redefined the landscape of deep learning by introducing a highly parallelizable and scalable architecture, fostering breakthroughs across diverse domains. The self-attention mechanism, coupled with the ability to process input sequences in parallel, makes transformers a powerful and flexible choice for machine learning tasks involving text, image processing, and speech recognition.
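As one example, the sinusoidal positional encoding from “Attention Is All You Need” can be sketched as below, with PE(pos, 2i) = sin(pos / 10000^(2i/d)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d)). Other schemes, such as learned position embeddings, are also common, so treat this as one illustrative option.

```python
import math

def positional_encoding(num_positions, d_model):
    pe = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model)
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

pe = positional_encoding(num_positions=3, d_model=4)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0]: position 0 is sin(0) / cos(0) everywhere
```

Each row is added to the corresponding token embedding, giving the otherwise order-blind attention layers a sense of position.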

Transformer models can be further improved with Retrieval-Augmented Generation (RAG), which integrates transformer-based LLMs with external knowledge retrieval systems, typically a vector database that supplies relevant information as context for a prompt. While transformers generate coherent text, their knowledge is limited to their training data, which may be outdated or incomplete. RAG addresses this by dynamically retrieving relevant information from external sources and incorporating it into the model’s responses. This approach improves accuracy, reduces hallucinations, and helps keep outputs up-to-date and contextually grounded, making it especially valuable for tasks like question answering, summarization, and domain-specific applications.
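The retrieval step can be sketched as follows. This is a toy under loud assumptions: a bag-of-words count vector stands in for a learned embedding model, a Python list stands in for the vector database, the documents are hypothetical, and the final call to an LLM is left out.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base standing in for a vector database
DOCS = [
    "A refund is available within 30 days of purchase.",
    "Standard shipping takes 5 to 7 business days.",
    "Support is available by email on weekdays.",
]

def embed(text):
    # Toy "embedding": word counts (real systems use a learned embedding model)
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=1):
    # Rank documents by similarity to the query and keep the top k
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query, docs, k=1):
    # Retrieved text is prepended as context for the LLM
    context = "\n".join(retrieve(query, docs, k))
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {query}"

prompt = build_prompt("Within how many days can I get a refund?", DOCS)
```

The resulting `prompt` would then be sent to the LLM, which answers from the retrieved refund policy rather than from memory alone.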

Many recent LLMs and chatbots, such as GPT-4o, Mistral, Claude, and Perplexity, use a transformer-based architecture.

4a. Generative Adversarial Networks (GAN)

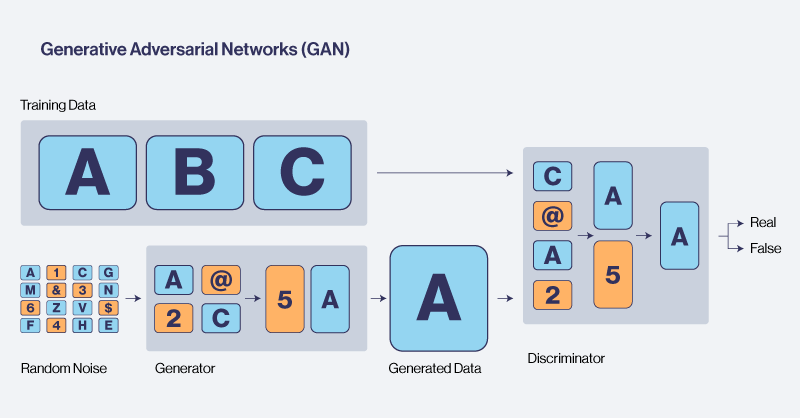

Generative Adversarial Networks (GANs) are a class of artificial intelligence models introduced by Ian Goodfellow and his colleagues in 2014. GANs operate on a unique principle of adversarial training, where two neural networks, the generator and the discriminator, engage in a competitive process to create realistic synthetic data.

GANs consist of a generator, tasked with creating realistic data, and a discriminator, responsible for distinguishing between real and synthetic data. The generator continually refines its output to fool the discriminator, while the discriminator improves its ability to differentiate between real and generated samples. Ideally, this adversarial training process continues iteratively until the generator produces data that is indistinguishable from real data, achieving a state of equilibrium.

Perhaps it's best to imagine the generator as a robber and the discriminator as a police officer. The more the robber steals, the better he gets at stealing things. At the same time, the police officer also gets better at catching the thief.

The losses in these neural networks are primarily a function of how the other network performs:

- Discriminator network loss is a function of generator network quality: Loss is high for the discriminator if it gets fooled by the generator’s fake images.

- Generator network loss is a function of discriminator network quality: Loss is high if the generator is not able to fool the discriminator.
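These two losses are commonly written as binary cross-entropy on the discriminator's output probabilities. The sketch below assumes D outputs the probability that its input is real and uses the “non-saturating” generator loss suggested in the original GAN paper; the probabilities in the usage lines are hypothetical.

```python
import math

EPS = 1e-12  # guards against log(0)

def bce(pred, target):
    # Binary cross-entropy between a predicted probability and a 0/1 target
    return -(target * math.log(pred + EPS) + (1 - target) * math.log(1 - pred + EPS))

def discriminator_loss(d_real, d_fake):
    # D should score real samples as 1 and generated samples as 0
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake):
    # Non-saturating form: G wants D to score its samples as real (1)
    return bce(d_fake, 1.0)

# d_real / d_fake: hypothetical probabilities D assigns to one real and one fake sample
print(discriminator_loss(0.9, 0.9) > discriminator_loss(0.9, 0.1))  # True: a fooled D pays more
print(generator_loss(0.1) > generator_loss(0.9))                    # True: an unconvincing G pays more
```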

During training, we update the discriminator and generator networks in alternation, aiming to improve both. The goal is to end up with generator weights that produce realistic-looking images. Once training is done, we use the generator network on its own to turn random noise into high-quality fake images.

GANs have found diverse applications in numerous fields. In computer vision, they generate lifelike images, aiding in tasks like image-to-image translation and style transfer. GANs have revolutionized the creation of synthetic data for training machine learning models, proving valuable in domains with limited labeled data. Additionally, GANs contribute to the generation of realistic scenes for video game design, facial recognition system training, and even the creation of deepfakes where fake faces are created to mimic a real person.

GANs may suffer from mode collapse, where the generator produces limited types of samples, failing to capture the full diversity of the underlying data distribution. GAN training can be challenging, requiring careful tuning, and is susceptible to issues such as vanishing gradients and convergence problems.

4b. Diffusion Models

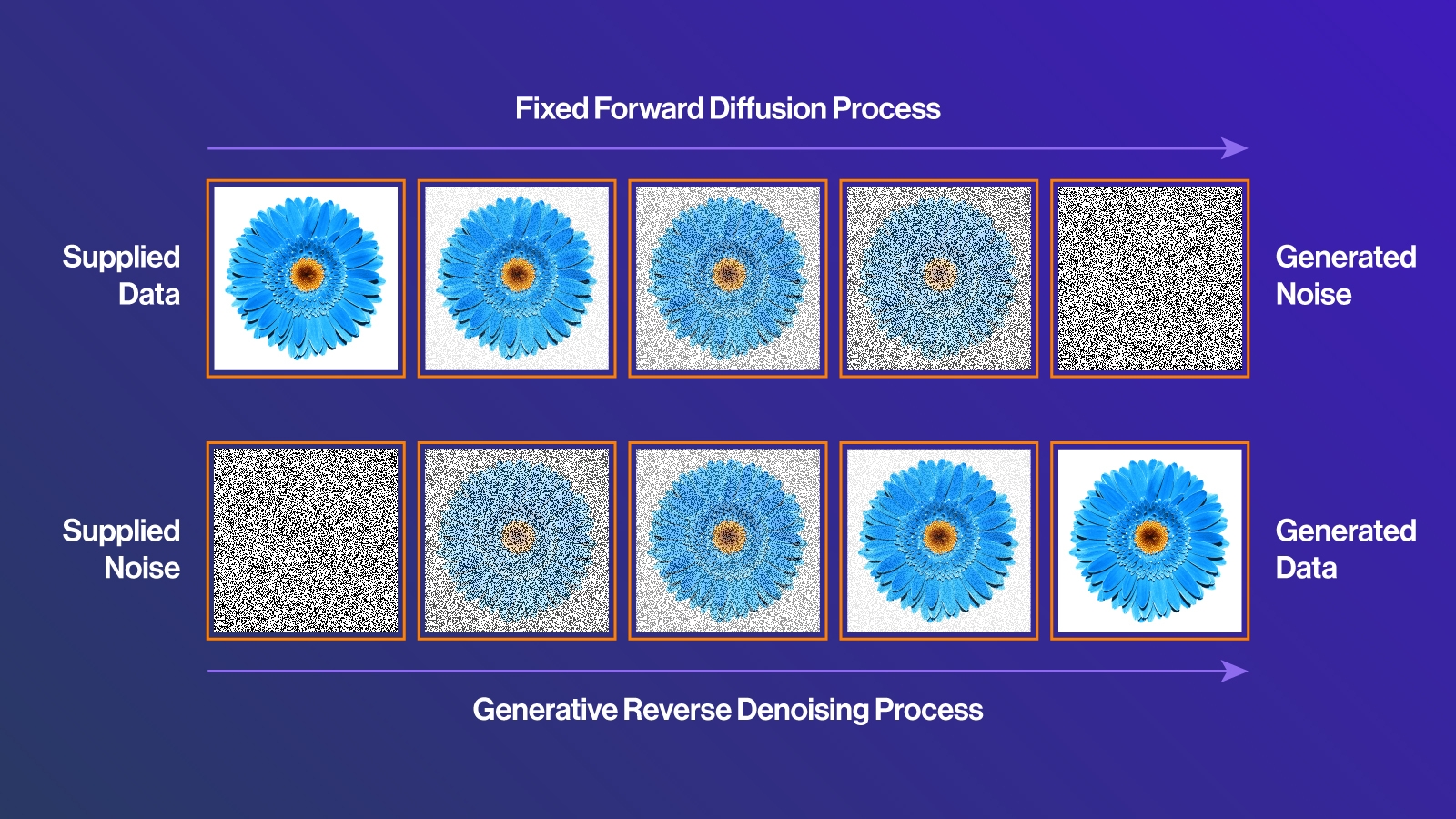

While diffusion and GAN models are vastly different, they overlap in their use cases, so we wanted to lump them together. Diffusion models are a groundbreaking approach to generative modeling that create high-quality data by reversing a process of noise addition. Inspired by physical diffusion, these models gradually transform random noise into structured outputs like images, videos, or molecular designs. The process begins by corrupting data with incremental noise during training, teaching the model to reconstruct the original data step by step. When generating new data, the model starts with pure noise and iteratively refines it into a coherent result.
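The noise-adding (forward) half of this process has a convenient closed form, sketched below in the style of DDPM: x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε. The linear β schedule from 1e-4 to 0.02 over 1,000 steps follows the DDPM paper's setup; the data point is hypothetical, and the denoising network that learns to reverse the process is not shown.

```python
import math
import random

def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    # Noise variance added at each step grows linearly from beta_start to beta_end
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]

def cumulative_alphas(betas):
    # alpha_bar_t = product of (1 - beta_s) for s <= t: how much signal survives
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out

def add_noise(x0, t, alpha_bar, rng):
    # Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar[t]) * x + math.sqrt(1.0 - alpha_bar[t]) * e
          for x, e in zip(x0, eps)]
    return xt, eps   # a denoising network is trained to predict eps from (xt, t)

alpha_bar = cumulative_alphas(linear_beta_schedule())
rng = random.Random(0)
x0 = [0.5, -0.2, 0.8]                      # a hypothetical "clean" data point
slightly_noisy, _ = add_noise(x0, 10, alpha_bar, rng)
almost_pure_noise, _ = add_noise(x0, 999, alpha_bar, rng)
```

By the last step almost none of the original signal remains, which is why generation can start from pure noise.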

This iterative refinement enables diffusion models to capture complex data distributions with exceptional fidelity and diversity. Compared with traditional generative methods like GANs, diffusion models are generally more stable during training and largely avoid common pitfalls like mode collapse, where outputs lack variety. However, their step-by-step process makes generation slower and more computationally demanding than with GANs.

Diffusion models have already shown transformative potential in applications such as image synthesis, where they power systems like Stable Diffusion to create stunningly realistic visuals from textual prompts. They’re also gaining traction in scientific fields, helping researchers design molecular structures or simulate dynamic systems. By combining robustness, precision, and versatility, diffusion models are redefining what’s possible in generative AI, making them a cornerstone of modern machine learning.

A good way to distinguish between GANs and diffusion models is by the type of data you want to generate. GANs excel at generating sets of similar images with limited variation, like the hundreds of fake faces found on ThisPersonDoesNotExist.com. Diffusion models are better at being creative and perform better for ideation and inspiration.

5. Reinforcement Learning

Reinforcement Learning (RL) is a machine learning paradigm where an agent learns to make decisions by interacting with an environment. Unlike supervised learning, which relies on labeled data, RL focuses on trial-and-error learning. The agent explores actions, receives feedback in the form of rewards or penalties, and refines its strategy to maximize cumulative rewards over time.

At the core of RL is the Markov Decision Process (MDP) framework, which describes the problem in terms of states, actions, and rewards, together with the policy the agent learns:

- States: Represent the environment's current condition.

- Actions: Decisions or moves the agent can take.

- Rewards: Signals indicating the quality of the agent's actions.

- Policy: A strategy that maps states to actions.
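These pieces come together in tabular Q-learning, one classic RL algorithm, sketched below on a hypothetical five-state corridor where the agent is rewarded only for reaching the right end. The environment, learning rate `alpha`, discount `gamma`, and the purely random exploration are assumptions chosen to keep the example small.

```python
import random

N_STATES, GOAL = 5, 4          # states 0..4; reaching state 4 ends the episode
ACTIONS = (0, 1)               # 0 = move left, 1 = move right

def step(state, action):
    # Environment: deterministic move, reward 1.0 only on reaching the goal
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]   # Q[state][action]
    for _ in range(episodes):
        s = 0
        for _ in range(200):                    # cap episode length
            a = rng.choice(ACTIONS)             # trial and error: random action
            s2, r, done = step(s, a)
            # Move Q toward reward plus discounted value of the best next action
            target = r if done else r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s2
            if done:
                break
    return Q

Q = q_learning()
# The learned policy maps each state to its highest-valued action
policy = [max(ACTIONS, key=lambda a: Q[s][a]) for s in range(GOAL)]
print(policy)  # [1, 1, 1, 1]: always move right
```

Even though the agent only ever acted randomly, the greedy policy read off the Q-table heads straight for the reward.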

Reinforcement Learning is particularly suited to problems that involve sequential decision-making, where the environment can be simulated and there is no clear training dataset. RL is ideal for scenarios requiring the optimization of long-term outcomes, such as planning in robotics, game-playing, or financial portfolio management. Applications like autonomous driving or video game AI leverage simulated environments where supervised learning isn’t an option because the correct outcomes are unknown or cannot be labeled easily. RL allows learning through experience, making it valuable in uncertain or dynamic contexts.

Reinforcement Learning excels in environments where other learning paradigms struggle, particularly when the solution depends on balancing exploration (trying new actions) and exploitation (leveraging known successful actions). Because the agent keeps learning from new experience, its strategy stays adaptable. This makes RL a strong fit when success depends on maximizing cumulative rewards, such as in supply chain optimization, scheduling problems, or energy grid management.
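The exploration/exploitation trade-off is often illustrated with an epsilon-greedy agent on a multi-armed bandit: with probability epsilon it tries a random action, otherwise it picks the action with the best estimated reward so far. The payout probabilities, epsilon, and step count below are hypothetical.

```python
import random

def epsilon_greedy_bandit(probs, steps=5000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    counts = [0] * len(probs)
    values = [0.0] * len(probs)          # running estimate of each action's reward
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(probs))                        # explore
        else:
            arm = max(range(len(probs)), key=lambda a: values[a])  # exploit
        reward = 1.0 if rng.random() < probs[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]        # incremental mean
    return values, counts

# Hypothetical payout probabilities; the agent does not know them in advance
values, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
```

Occasional exploration is what lets the agent discover the best action; after that, exploitation means it is chosen most of the time.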

However, RL may not be the best fit for every problem. It requires significant computational resources, is sensitive to poorly designed reward signals, and can struggle in environments where exploration is too costly or unsafe, such as certain real-world medical or industrial applications.

By understanding when and where to apply RL, researchers and practitioners can unlock its potential to solve complex, interactive problems that traditional methods cannot address effectively.

6. Autoencoders

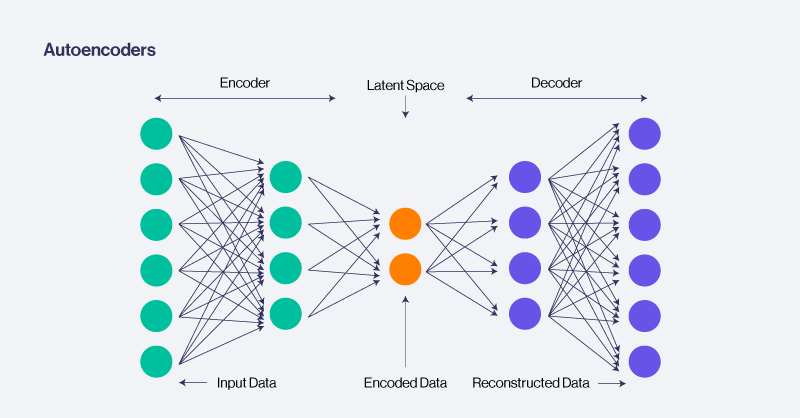

Autoencoder neural networks are unsupervised learning models designed for data encoding and decoding. Consisting of an encoder and a decoder, these networks learn efficient representations of input data, compressing it into a lower-dimensional space and then reconstructing it as faithfully as possible.

Autoencoders are employed in image and signal compression, reducing the dimensionality of data while preserving essential features. They can also be used for anomaly detection: by learning the normal patterns in data, autoencoders can identify anomalies or outliers, making them valuable for cybersecurity and fault detection. Autoencoders also help learn hierarchical representations of data, contributing to feature extraction for subsequent machine learning tasks.
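Anomaly detection by reconstruction error can be sketched with the smallest possible autoencoder: a linear encoder that compresses 2-D points to one number and a linear decoder that expands it back, trained by gradient descent without labels. The data, learning rate, and error thresholds are illustrative assumptions; practical autoencoders use deeper, nonlinear networks.

```python
def train_linear_autoencoder(data, lr=0.05, steps=2000):
    # Encoder w: 2-D input -> 1-D code; decoder v: 1-D code -> 2-D reconstruction
    w, v = [0.1, 0.1], [0.1, 0.1]   # small fixed initialization
    n = len(data)
    for _ in range(steps):
        grad_w, grad_v = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            z = w[0] * x[0] + w[1] * x[1]                # encode (compress)
            err = [x[i] - v[i] * z for i in range(2)]    # input minus reconstruction
            dz = -2.0 * (err[0] * v[0] + err[1] * v[1])  # d(loss)/dz
            for i in range(2):
                grad_v[i] += -2.0 * err[i] * z / n
                grad_w[i] += dz * x[i] / n
        for i in range(2):
            w[i] -= lr * grad_w[i]
            v[i] -= lr * grad_v[i]
    return w, v

def reconstruction_error(x, w, v):
    z = w[0] * x[0] + w[1] * x[1]
    return sum((x[i] - v[i] * z) ** 2 for i in range(2))

# "Normal" unlabeled training data lies on the line x2 = 2 * x1
normal = [(t, 2 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
w, v = train_linear_autoencoder(normal)

print(reconstruction_error((1.0, 2.0), w, v) < 0.01)  # True: matches the learned pattern
print(reconstruction_error((2.0, -1.0), w, v) > 1.0)  # True: flagged as an anomaly
```

Points that follow the pattern seen in training are reconstructed almost perfectly, while an off-pattern point produces a large error that can be flagged.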

Their applications span various domains, offering advantages in data compression, anomaly detection, and feature learning. They don’t require labeled data for training and operate unsupervised, which makes them applicable in scenarios where labeled data is hard to obtain. Although autoencoders can overfit, choosing the right encoding dimension helps ensure a reliable and powerful model.

Conclusion

Neural networks have revolutionized the field of machine learning, offering specialized architectures like CNNs, RNNs, Transformers, GANs, Diffusion Models, Autoencoders, and Reinforcement Learning to tackle diverse and complex challenges. Each model is uniquely suited to specific tasks—whether it’s image recognition, sequential data processing, text generation, anomaly detection, or decision-making in dynamic environments. Selecting the right model for the right use case is crucial to achieving optimal performance, as no single architecture can address every problem effectively.

| Model | Use Case |
| --- | --- |
| Convolutional Neural Network | Image Classification, Segmentation, and Detection |
| Recurrent Neural Network | Sequential Data and Time Series Analysis |
| Transformers | Natural Language Processing, Text Generation, Knowledge Base |
| Generative Adversarial Networks | Limited-Variation Image and Data Generation |
| Diffusion Models | Creative Image and Video Generation |
| Autoencoders | Feature Extraction, Compression and Denoising, Anomaly Detection, Recommendation Systems |
| Reinforcement Learning | Robotics, Autonomous Vehicles, Optimization, Scientific Research |

Beyond model selection, hardware considerations play a pivotal role in neural network performance. High-performance GPUs are essential for handling the computational demands of large models like Transformers and Diffusion Models, which thrive on parallel processing for tasks like NLP and generative content creation. Conversely, more lightweight architectures like Autoencoders or smaller CNNs can run efficiently on edge devices and less powerful GPUs for use cases like real-time anomaly detection or embedded vision systems.

In today’s rapidly evolving AI landscape, understanding the strengths and limitations of each neural network architecture, along with the right hardware to deploy them, is key to unlocking the full potential of machine learning across industries. Choosing wisely not only enhances performance but also ensures efficient use of resources, enabling scalable, impactful AI solutions. Read our blog on the recommended hardware for training AI.